Music has evolved from a form of entertainment into a personal companion. The right song can amplify or soothe an emotion, which makes music a powerful tool for emotional engagement. Using technology to read emotions and offer personalized listening is a natural next step. Enter Moodify, an emotion-based music recommendation system that reads the user's facial expression to detect their mood and suggests songs to match.

This case study explores the development of Moodify, highlighting the technology stack used and the challenges faced while creating a seamless experience for users. The tech stack included Next.js, Tailwind CSS, NextAuth, face-api.js for facial recognition, and the Deezer API for music recommendations.

The Idea Behind Moodify

The concept of Moodify was born from the idea that music is deeply connected to emotions. Many people choose songs based on their current mood, whether they are happy, sad, energetic, or relaxed. The challenge was to automate that choice: build an application that reads emotions from facial expressions and recommends music without the user having to select it manually.

Automating the choice gives each user a personalized music journey, which makes it a natural use case for AI-powered facial expression and emotion detection.

Key Features of Moodify

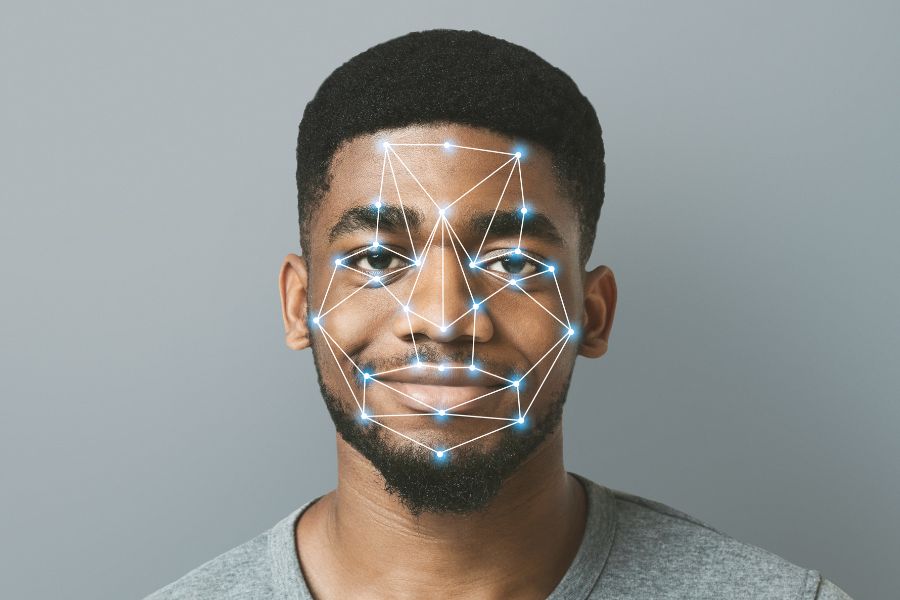

- Facial Emotion Recognition: Moodify scans the user's face and detects their current mood using emotion recognition technology.

- Music Recommendation Based on Mood: Once the emotion is detected, the system connects to the Deezer API to fetch songs that match the user's emotional state.

- User Authentication: Users can log in securely using NextAuth, which offers social logins and secure session handling.

- Seamless User Interface: Built with Next.js and styled with Tailwind CSS, Moodify provides a sleek and responsive interface for users on all devices.

Technology Stack Overview

- Next.js: A React framework that offers server-side rendering and static site generation. Next.js was chosen for its flexibility, ease of development, and built-in file-based routing.

- Tailwind CSS: A utility-first CSS framework that allows for rapid UI development. It was used to create a responsive and modern interface for Moodify without the need for writing extensive custom CSS.

- NextAuth: For authentication, Moodify integrated NextAuth, which supports multiple sign-in methods, including Google and other social logins. This allowed users to easily sign in and save their preferences.

- face-api.js: The face detection and emotion detection part of the system was powered by face-api.js, a JavaScript library that runs in the browser and can detect facial expressions in real time.

- Deezer API: To fetch music recommendations, Moodify utilized the Deezer API. Deezer offers a rich music catalog and allows developers to access tracks, playlists, and artist details via their API.

How Moodify Works: A Technical Breakdown

Step 1: Facial Emotion Detection with face-api.js

At the core of Moodify's emotion-based recommendation system is face-api.js, a library built on top of TensorFlow.js. This library allows for real-time facial expression detection directly in the browser.

To integrate this, the user's webcam is activated once they give permission. face-api.js locates the user's face (and, optionally, its landmarks) in each video frame and runs an expression classifier on the face region. The classifier assigns a probability to each of seven classes: neutral, happy, sad, angry, fearful, disgusted, and surprised.
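The detection step can be sketched roughly as follows. This is a minimal illustration, not Moodify's actual code: the `DetectFrame` callback and the `firstDetectedFace` helper are hypothetical names. In the browser, the callback would likely wrap a face-api.js call such as `faceapi.detectSingleFace(video, new faceapi.TinyFaceDetectorOptions()).withFaceExpressions()` and return its `expressions` field, assuming the detector and expression models have been loaded first. The detector is injected here so the polling logic can run without a webcam.

```typescript
// The seven expression labels reported by face-api.js.
const EXPRESSIONS = [
  "neutral", "happy", "sad", "angry", "fearful", "disgusted", "surprised",
] as const;
type Expression = (typeof EXPRESSIONS)[number];
type ExpressionScores = Record<Expression, number>;

// Hypothetical detector signature: resolves to per-expression probabilities,
// or undefined when no face is visible in the current frame.
type DetectFrame = () => Promise<ExpressionScores | undefined>;

// Polls the detector up to maxAttempts times and returns the scores from the
// first frame in which a face was found, or undefined if no face appeared.
async function firstDetectedFace(
  detect: DetectFrame,
  maxAttempts: number,
): Promise<ExpressionScores | undefined> {
  for (let attempt = 0; attempt < maxAttempts; attempt++) {
    const scores = await detect();
    if (scores !== undefined) {
      return scores;
    }
  }
  return undefined;
}
```

Capping the number of attempts is an assumed design choice; it lets the UI show a "no face found" message instead of polling forever.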

Step 2: Analyzing Emotion Data

Once the expression probabilities are available, the system picks the emotion with the highest probability. For example, if the probability of "happy" is 0.8 and "neutral" is 0.2, the system recognizes that the user is happy.

This emotional data is then passed on to the next step, where the music recommendation process begins.
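Picking the most likely emotion amounts to an argmax over the probability map. The sketch below assumes the scores arrive as a plain object keyed by emotion name; the `dominantEmotion` function and `DominantEmotion` type are illustrative names, not part of face-api.js or Moodify.

```typescript
// Probability per emotion label, e.g. { happy: 0.8, neutral: 0.2 }.
type EmotionScores = Record<string, number>;

interface DominantEmotion {
  emotion: string;
  probability: number;
}

// Returns the label with the highest probability, or undefined when the
// score map is empty (for example, when no face was detected).
function dominantEmotion(scores: EmotionScores): DominantEmotion | undefined {
  let best: DominantEmotion | undefined;
  for (const emotion of Object.keys(scores)) {
    const probability = scores[emotion];
    if (best === undefined || probability > best.probability) {
      best = { emotion, probability };
    }
  }
  return best;
}
```

With the example from the text, `dominantEmotion({ happy: 0.8, neutral: 0.2 })` returns the `happy` entry with probability 0.8.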

Step 3: Music Recommendation with Deezer API

Moodify uses the Deezer API to fetch music recommendations based on the detected emotion. The API allows you to search for songs, artists, and playlists, giving Moodify a wide range of options to tailor the perfect playlist based on a user's mood.

For example:

- Happy Mood: The app searches for upbeat and lively tracks.

- Sad Mood: The app fetches more mellow and calming songs.

- Energetic Mood: Songs with fast tempos and higher intensity are selected.
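One way to turn a mood into a Deezer request is a lookup table from mood to search keywords. The sketch below is an assumption about how this could work, not Moodify's actual mapping: the keywords, the fallback query, and the `buildDeezerSearchUrl` helper are all hypothetical. It targets Deezer's public search endpoint (`https://api.deezer.com/search?q=...`) and assumes the `limit` parameter is accepted there. The network call itself is left out so the example runs offline.

```typescript
// Hypothetical mood-to-keyword table; the search terms are illustrative only.
const MOOD_QUERIES: Record<string, string> = {
  happy: "upbeat",
  sad: "mellow acoustic",
  energetic: "workout",
};

// Assumed default query for moods that are not in the table.
const FALLBACK_QUERY = "chill";

// Builds a Deezer search URL for the given mood.
function buildDeezerSearchUrl(mood: string, limit: number = 10): string {
  // Own-property check so inherited keys such as "constructor" fall back too.
  const known = Object.prototype.hasOwnProperty.call(MOOD_QUERIES, mood);
  const query = known ? MOOD_QUERIES[mood] : FALLBACK_QUERY;
  return `https://api.deezer.com/search?q=${encodeURIComponent(query)}&limit=${limit}`;
}
```

The resulting URL can be passed to `fetch` from a server-side route. Keeping the mapping in one table makes it easy to tune the keywords per mood without touching the request logic.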

Step 4: Displaying the Playlist

The combination of real-time facial recognition and dynamic playlist generation provides a unique and personalized experience, which is the core appeal of Moodify.

Challenges and Solutions

1. Real-Time Facial Recognition

2. Integrating Deezer API

3. Authentication

The User Experience

- The user visits the website and signs in using NextAuth.

- They grant permission to access the webcam.

- The app scans the user's face in real time and detects their mood.

- Based on the emotion, the app fetches a playlist using the Deezer API.

- The playlist is displayed, and the user can immediately start listening to songs.

Conclusion

Moodify demonstrates how technology can enhance everyday experiences like listening to music by making them more personalized and emotionally engaging. By combining facial recognition technology with music recommendation APIs, Moodify offers a seamless and intelligent way to match users' moods with the right music.

Through the use of Next.js, Tailwind CSS, NextAuth, face-api.js, and the Deezer API, Moodify showcases the potential of modern web technologies to deliver innovative and interactive solutions in the music industry. The development process also highlighted the importance of optimizing for performance, API usage, and user authentication for a smooth and enjoyable experience.